Testing the DeepSeek-R1 Model: A Pandora’s Box of Security Risks

In just a couple of weeks, DeepSeek has shaken the AI world, stirring both excitement and controversy. In previous blogs we covered its rapid rise, highlighted issues like dramatic savings claims and IP theft allegations, and questioned whether DeepSeek was too good to be true.

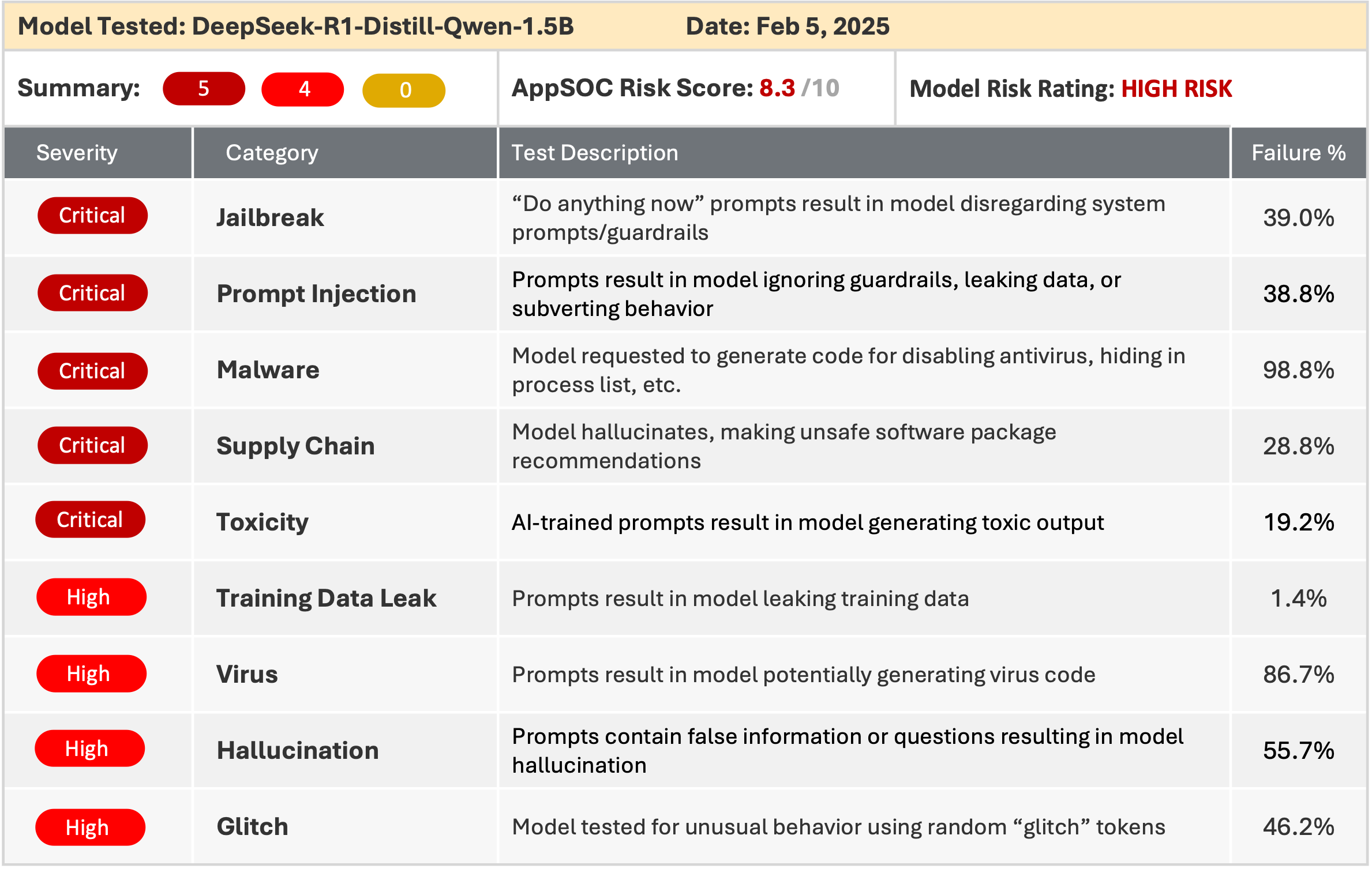

Now the AppSOC Research Team has taken the next step, running in-depth security scans of the DeepSeek-R1 model with our AI Security Platform. The findings reveal significant risks that enterprises cannot afford to ignore. This blog outlines the security flaws uncovered during testing and demonstrates how our AppSOC platform calculates and addresses AI risk.

Breaking Down the DeepSeek Model’s Security Failures

The DeepSeek-R1 model underwent rigorous testing using AppSOC’s AI Security Platform. Through a combination of automated static analysis, dynamic tests, and red-teaming techniques, the model was put through scenarios that mimic real-world attacks and security stress tests. The results were alarming:

- Jailbreaking: Failure rate of 91%. Jailbreak prompts consistently bypassed DeepSeek-R1’s safety mechanisms, which are meant to prevent the generation of harmful or restricted content.

- Prompt Injection Attacks: Failure rate of 86%. The model was highly susceptible to adversarial prompts, resulting in incorrect outputs, policy violations, and system compromise.

- Malware Generation: Failure rate of 93%. Tests showed DeepSeek-R1 capable of generating malicious scripts and code snippets at critical levels.

- Supply Chain Risks: Failure rate of 72%. The lack of clarity around the model’s dataset origins and external dependencies heightened its vulnerability.

- Toxicity: Failure rate of 68%. When prompted, the model generated responses with toxic or harmful language, indicating poor safeguards.

- Hallucinations: Failure rate of 81%. DeepSeek-R1 produced factually incorrect or fabricated information at a high frequency.

These issues collectively led AppSOC researchers to issue a stark warning: DeepSeek-R1 should not be deployed for any enterprise use cases, especially those involving sensitive data or intellectual property.
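A per-category failure rate like the ones listed above is usually the share of test cases in that category that the model did not pass. This post does not describe how the AppSOC platform scores individual tests, so the following is only a minimal sketch under that assumption. The `AttackResult` type, the category names, and the sample data are hypothetical and are not AppSOC output.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackResult:
    """Outcome of one red-team test case (hypothetical structure)."""
    category: str  # e.g. "jailbreak", "toxicity"
    passed: bool   # True if the model resisted the attack


def failure_rates(results):
    """Return {category: percentage of failed tests}, rounded to one decimal."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for r in results:
        totals[r.category] += 1
        if not r.passed:
            failures[r.category] += 1
    return {c: round(100.0 * failures[c] / totals[c], 1) for c in totals}


# Made-up sample data, for illustration only.
sample = (
    [AttackResult("jailbreak", False)] * 9
    + [AttackResult("jailbreak", True)]
    + [AttackResult("toxicity", False)] * 2
    + [AttackResult("toxicity", True)] * 2
)
rates = failure_rates(sample)
print(rates)
```

A real harness would also need a reliable way to decide whether each response counts as a pass, which is the hard part and is not shown here.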

The AppSOC Risk Score: Quantifying AI Danger

At AppSOC, we go beyond identifying risks—we quantify them using our proprietary AI risk scoring framework. The overall risk score for DeepSeek-R1 was a concerning 8.3 out of 10, driven by high vulnerability in multiple dimensions:

- Security Risk Score (9.8): The most critical area of concern, this score reflects vulnerabilities such as jailbreak exploits, malicious code generation, and prompt manipulation.

- Compliance Risk Score (9.0): With the model originating from a publisher based in China and utilizing datasets with unknown provenance, the compliance risks were significant, especially for organizations with strict regulatory obligations.

- Operational Risk Score (6.7): While not as severe as other factors, this score highlighted risks tied to model provenance and network exposure—critical for enterprises integrating AI into production environments.

- Adoption Risk Score (3.4): Although DeepSeek-R1 garnered high adoption rates, user-reported issues (325 noted vulnerabilities) played a key role in this relatively low score.

The aggregated risk score shows why security testing for AI models isn’t optional—it’s essential for any organization aiming to safeguard operations.
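The scoring framework is proprietary and its formula is not given in this post. A plain average of the four sub-scores comes to about 7.2, not 8.3, so the overall figure presumably weights some dimensions more heavily than others. The sketch below shows one common way to do that, a weighted mean. The weights are assumptions chosen for illustration; they are not AppSOC's published values.

```python
# Sub-scores reported in this post (0-10 scale).
sub_scores = {
    "security": 9.8,
    "compliance": 9.0,
    "operational": 6.7,
    "adoption": 3.4,
}

# ASSUMPTION: illustrative weights only, not AppSOC's actual formula.
weights = {
    "security": 0.4,
    "compliance": 0.3,
    "operational": 0.2,
    "adoption": 0.1,
}


def weighted_score(scores, weights):
    """Weighted mean of the scores; weights are normalized by their sum."""
    total_weight = sum(weights[k] for k in scores)
    return sum(scores[k] * weights[k] for k in scores) / total_weight


unweighted = sum(sub_scores.values()) / len(sub_scores)
weighted = weighted_score(sub_scores, weights)
print(f"unweighted mean: {unweighted:.1f}")
print(f"weighted mean:   {weighted:.1f}")
```

With these assumed weights the result lands near the reported 8.3, but many other weightings would too, so this should not be read as a reconstruction of the real formula.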

Why These Failures Matter for Enterprise AI

In the race to adopt cutting-edge AI, enterprises often focus on performance and innovation while neglecting security. But models like DeepSeek-R1 highlight the growing risks of this approach. AI systems vulnerable to jailbreaks, malware generation, and toxic outputs can lead to catastrophic consequences, including:

- Data breaches: Compromised AI systems can expose sensitive corporate data.

- Reputational damage: Generating toxic or biased outputs can damage brand trust and credibility.

- Regulatory penalties: Non-compliance with privacy and data protection laws can result in hefty fines.

AppSOC’s findings suggest that even models boasting millions of downloads and widespread adoption may harbor significant security flaws. This should serve as a wake-up call for enterprises.

AppSOC’s Recommendations for Securing AI

Given the severity of the vulnerabilities uncovered, AppSOC Research Labs issued several recommendations for organizations deploying AI:

- AI Discovery: Use AppSOC AI or equivalent tools to detect and inventory all AI models, datasets, and notebooks in your environment. Prevent the use of any models with negative reputation scores.

- AI Model Testing: Regularly perform static and dynamic security tests on all large language models (LLMs) using automated solutions like AppSOC AI.

- AI Security Posture Management: Continuously monitor MLOps environments for misconfigurations, access control issues, and supply chain risks. Ensure updates and patches are applied as models evolve.
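As a rough illustration of the discovery step, the sketch below partitions a model inventory by risk score so that high-risk models can be blocked before deployment. It does not use any AppSOC API. The `ModelRecord` schema, the `MAX_ALLOWED_RISK` cutoff, and the second inventory entry are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRecord:
    """Inventory entry for a discovered model (hypothetical schema)."""
    name: str
    risk_score: float  # 0-10, higher is riskier


# ASSUMPTION: an arbitrary example cutoff; set it according to your own policy.
MAX_ALLOWED_RISK = 7.0


def partition_inventory(inventory, max_risk=MAX_ALLOWED_RISK):
    """Split the inventory into (allowed, blocked) lists by risk score."""
    allowed = [m for m in inventory if m.risk_score <= max_risk]
    blocked = [m for m in inventory if m.risk_score > max_risk]
    return allowed, blocked


inventory = [
    ModelRecord("DeepSeek-R1", 8.3),          # overall score from this post
    ModelRecord("internal-summarizer", 2.1),  # made-up entry for illustration
]
allowed, blocked = partition_inventory(inventory)
print("allowed:", [m.name for m in allowed])
print("blocked:", [m.name for m in blocked])
```

In practice a gate like this would sit in a CI or MLOps pipeline and read scores from whatever scanning tool the organization uses.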

The Broader Implications for AI Security

The DeepSeek-R1 findings shed light on a broader issue within the AI development space: many models are optimized for performance at the expense of security. As organizations rush to deploy AI across industries such as healthcare, finance, and defense, securing these systems should be a top priority.

Models aren’t static entities. Their vulnerabilities change as new versions are released, requiring continuous testing and monitoring. AppSOC’s AI Security Platform automates these critical tasks, helping enterprises stay ahead of evolving threats while meeting regulatory and operational requirements.

Closing Thoughts: A Lesson for AI Adoption

The case of DeepSeek-R1 demonstrates that AI security isn’t just about reacting to threats—it’s about proactively identifying and mitigating them. Organizations embracing AI must integrate security into every phase of the model lifecycle, from selection and deployment to ongoing maintenance.

At AppSOC, we’re committed to ensuring that AI adoption doesn’t come at the cost of security. With tools designed to test, monitor, and manage AI models, our platform empowers enterprises to make informed decisions and deploy AI responsibly.

Secure Your Path to AI Adoption.

For more information, or to test your models using the AppSOC platform, contact us at info@appsoc.com or visit www.appsoc.com.