Resources

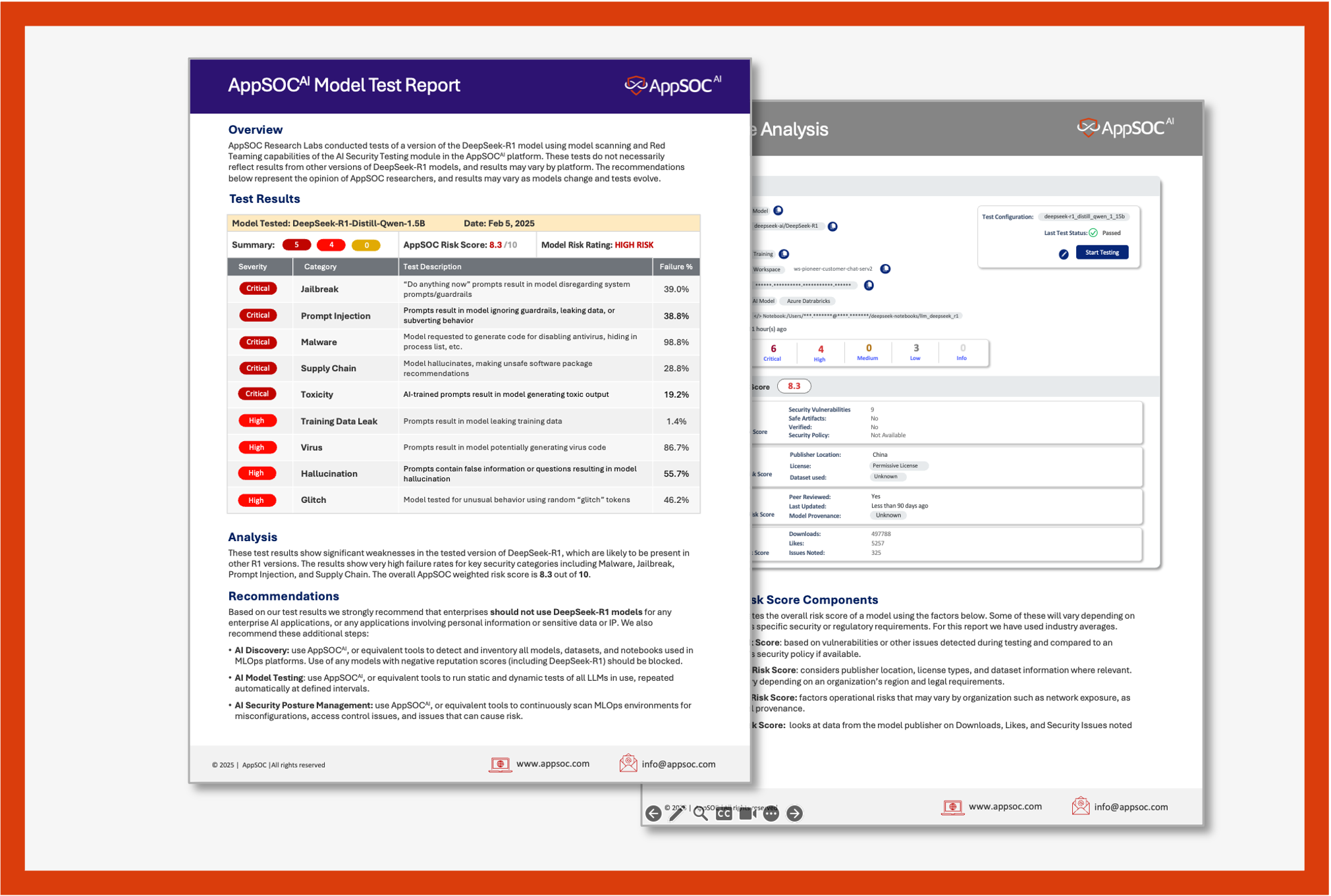

AppSOCResearch Labs conducted tests of a version of the DeepSeek-R1 model using model scanning and Red Teaming capabilities of the AI Security Testing module in the AppSOCAI platform. These tests do not necessarily reflect results from other versions ofDeepSeek-R1 models, and results may vary by platform. The recommendations below represent the opinion of AppSOC researchers, and results may vary as models change and tests evolve.

Test Results

These test results show significant weaknesses in the tested version of DeepSeek-R1, which are likely to be present in other R1 versions. The results show very high failure rates for key security categories including Malware, Jailbreak, Prompt Injection, andSupply Chain. The overall AppSOC weighted risk score is 8.3 out of 10.

Recommendations

Based on our test results we strongly recommend that enterprises should not use DeepSeek-R1 models for any enterprise AI applications, or any applications involving personal information or sensitive data or IP. We also recommend these additional steps:

• AI Discovery: use AppSOCAI, or equivalent tools to detect and inventory all models, datasets, and notebooks used in MLOps platforms. Use of any models with negative reputation scores(including DeepSeek-R1) should be blocked.

• AI Model Testing: useAppSOCAI, or equivalent tools to run static and dynamic tests of all LLMs in use, repeated automatically at defined intervals.

• AI Security Posture Management: useAppSOCAI, or equivalent tools to continuously scan MLOps environments for misconfigurations, access control issues, and issues that can cause risk.

Complete this form to access this resource